The FinTech fork in the road: speed versus skepticism

Click. Approved. That’s the modern FinTech promise: frictionless finance at the speed of a push notification. Critics worry about closed scoring systems, opaque models, lock-in, and appeal mazes. I’m unapologetically pro-AI: done right, FinTech AI expands access, reduces bias, and cuts fraud. The risks are solvable with design and governance; they are not reasons to slam the brakes. Research shows AI can improve fairness in lending when built with transparency and controls (Harvard Business Review).

Thesis (Pro-AI): FinTech as an inclusion engine

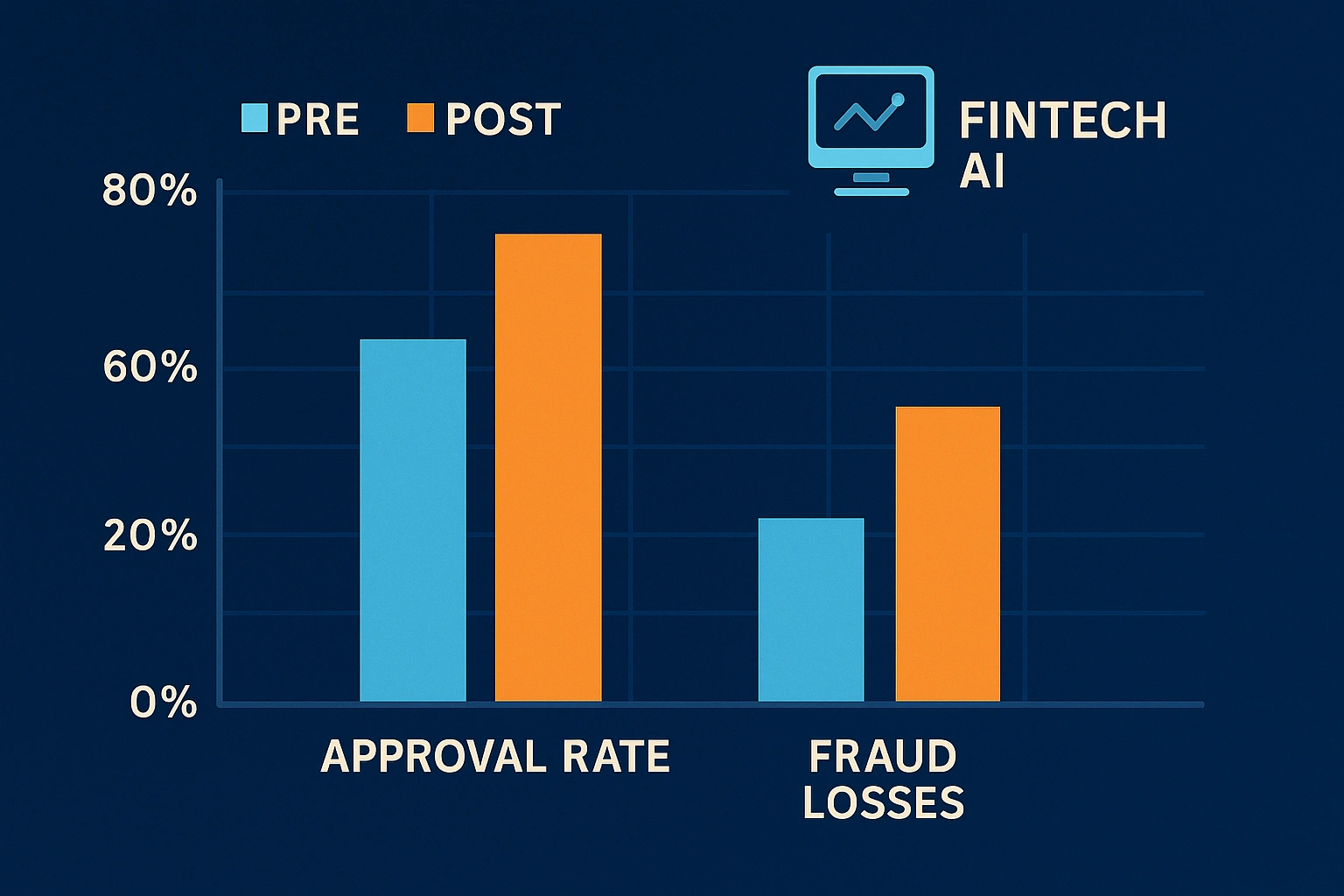

Legacy credit rules missed millions of “invisible primes”: creditworthy people that traditional scores could not see. FinTech models learn from cash-flow, open-banking feeds, and real-time behavior to approve more good customers, faster. This is how you get higher approval rates, fewer false declines, and happier users without taking on more risk.

Why pro-AI wins in FinTech:

- Speed: Millisecond decisions keep checkout flows smooth.

- Fairness: Well-designed models can reduce legacy bias in lending (Harvard Business Review).

- Security: AI fights fraud at network scale; it spots rings that rule engines miss (Forbes).

Antithesis (the critique): FinTech as a walled garden

The critique isn’t imaginary: closed scoring systems can trap people. Scores may be non-portable, reasons vague, and appeals slow, which is exactly how trust erodes. The Apple Card flare-up showed how quickly perceived unfairness explodes when explanations are thin (the H-in-Q Apple Card case study listed in the References unpacks that history and its lessons).

But here’s the point: these problems are fixable with open standards, reason codes, portability, and independent audits. Don’t abandon FinTech AI; engineer it.

Inside the (not-so) black box: how FinTech AI really works

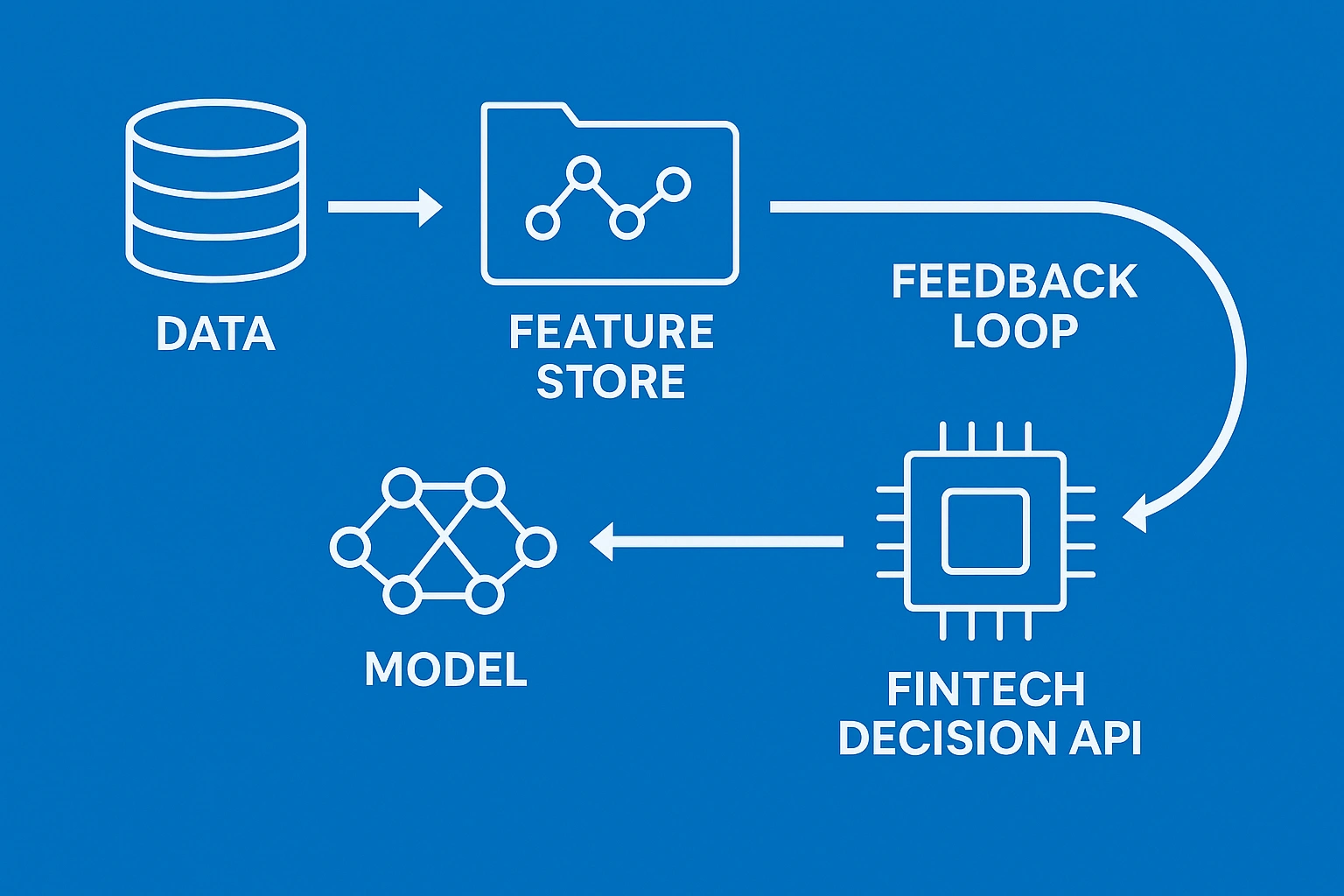

FinTech decisions travel a simple path:

- Data ingestion: transactions, open-banking feeds, device/behavioral risk signals (handled ethically).

- Feature store: reusable FinTech signals (e.g., cash-flow stability, merchant risk clusters).

- Models: gradient-boosted trees for credit, graph ML for fraud, LLMs for clear explanations.

- Decision API: low-latency calls embedded in apps and payment gateways.

- Monitoring: drift, stability, fairness, complaint signals, and real adverse-action reasons.
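The path above can be sketched in a few lines. This is a minimal illustration, not a production design: the scorecard, its weights, the baseline applicant, the cutoff, and the reason texts are all hypothetical, and a simple linear score stands in for the gradient-boosted model mentioned in the list.

```python
from dataclasses import dataclass

# Hypothetical scorecard: feature name -> (weight, customer-facing reason text).
# Features, weights, baseline, and cutoff are illustrative assumptions only.
SCORECARD = {
    "cash_flow_stability": (2.0, "Income and spending vary a lot month to month"),
    "on_time_payment_rate": (3.0, "Recent payments were missed or late"),
    "account_age_years": (0.5, "Linked account has a short history"),
}
BASELINE = {
    "cash_flow_stability": 0.7,
    "on_time_payment_rate": 0.9,
    "account_age_years": 3.0,
}
APPROVE_AT = 0.0


@dataclass
class Decision:
    approved: bool
    score: float
    reasons: list[str]


def decide(features: dict[str, float], max_reasons: int = 2) -> Decision:
    """Score an applicant and attach plain-language reasons to a decline."""
    # Contribution of each feature relative to the baseline applicant.
    contributions = {
        name: weight * (features[name] - BASELINE[name])
        for name, (weight, _) in SCORECARD.items()
    }
    score = sum(contributions.values())
    approved = score >= APPROVE_AT

    reasons: list[str] = []
    if not approved:
        # Most negative contributions first; only negative ones become reasons.
        worst = sorted(contributions, key=contributions.get)[:max_reasons]
        reasons = [SCORECARD[name][1] for name in worst if contributions[name] < 0]
    return Decision(approved, score, reasons)
```

Calling `decide({"cash_flow_stability": 0.4, "on_time_payment_rate": 0.6, "account_age_years": 4.0})` declines the applicant and returns the two factors that pulled the score down most, which is the kind of specific reason a customer can act on. In a real stack, the same interface would sit behind the decision API and feed its outputs to monitoring.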

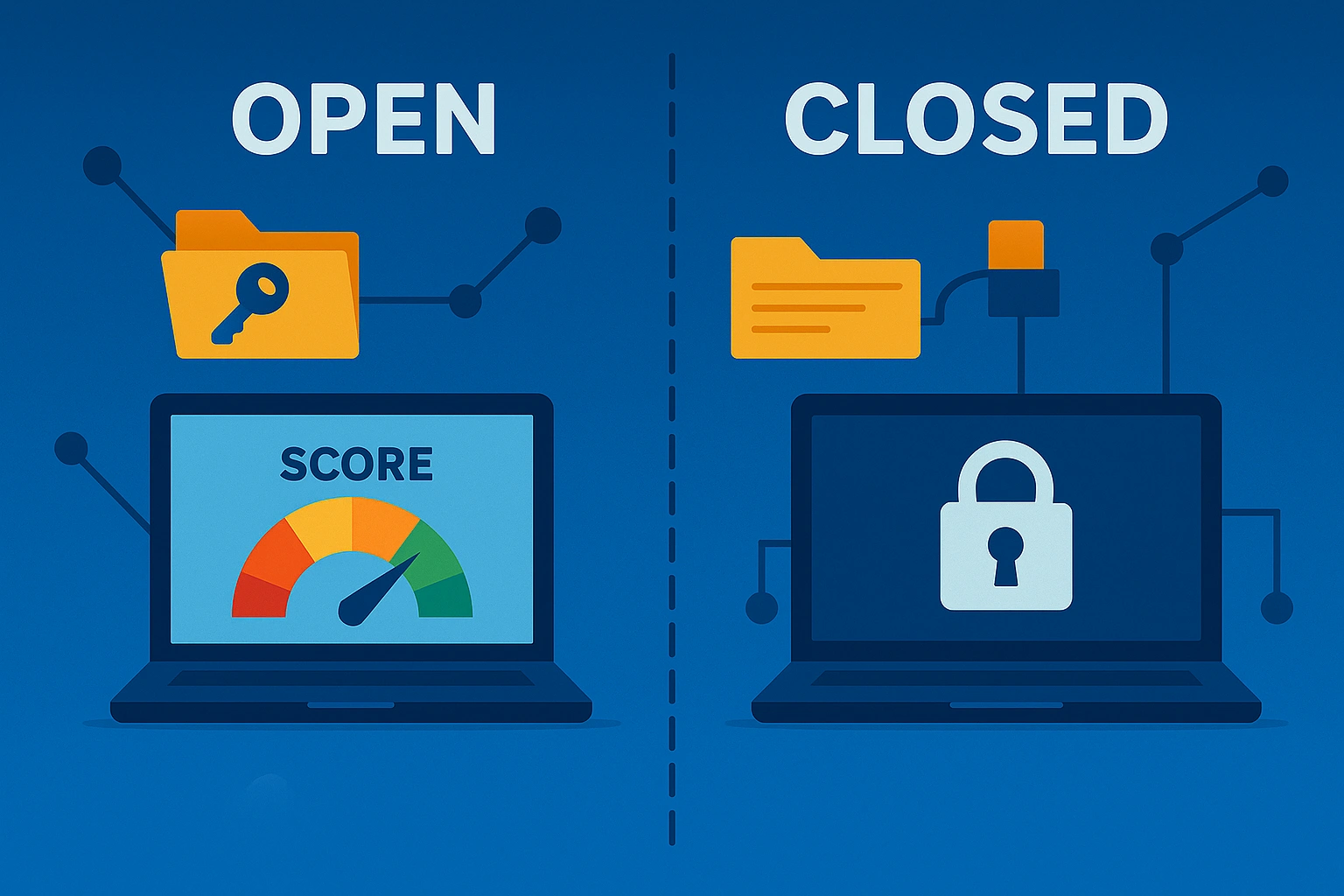

Open vs. closed scoring in FinTech (what leaders must demand)

| Dimension | Open scoring (good-practice FinTech) | Closed scoring (risk) |
| --- | --- | --- |
| Explainability | Specific, human-readable reasons | Opaque rationales; generic codes |
| Portability | Scores/attributes transferable | Score locked to one ecosystem |
| Appeals | Documented, time-bound, tracked | Manual black box; unclear SLA |
| Vendor lock-in | Interoperable features | Proprietary features only |
| Regulatory fit | Audit trails + XAI | “We can’t explain it” posture |

Proof points: what research and regulators actually show

- Fairer loans (when designed right). Harvard Business Review explains how algorithmic design and transparency can make lending fairer than manual judgment.

- Fraud down, trust up. Forbes documents how FinTech teams use AI to cut fraud and false positives by reading graph-level patterns and behavior signals.

- Governance matters. An EY study found most enterprises saw initial losses from AI when controls were weak, yet those advancing Responsible AI reported better business outcomes. Translation: guardrails aren’t bureaucracy; they’re ROI (Reuters).

- Context risk. AI-boosted misinformation can accelerate deposit flight; financial firms should treat social signals as risk telemetry. Design for resilience; don’t fear the tech (Reuters).

Trust by design (so FinTech stays frictionless and fair)

Being pro-AI doesn’t mean being naïve. It means engineering trust:

- Model inventory & lineage for every FinTech decision model.

- Explainability (XAI) with clear reason codes customers can understand.

- Fairness testing across protected groups, with remediation and documentation.

- Privacy-preserving ML (federated learning, differential privacy) so consortium learning doesn’t centralize sensitive data, a practice encouraged by the World Economic Forum’s financial-services work.

- Human-in-the-loop for edge cases and policy-guarded overrides.

- Vendor diversity to avoid correlated failures across the FinTech stack.
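As one concrete way to run the fairness testing listed above, the sketch below compares approval rates across groups and flags any group whose rate falls below a chosen fraction of a reference group's rate. The 0.8 default follows the widely used "four-fifths" rule of thumb; treating it as the right bar for a given lending product is an assumption, and the function names are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group_label, approved_bool) pairs."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, ok in decisions:
        total[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / total[group] for group in total}


def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {group: rate / reference_rate for group, rate in rates.items()}


def flag_groups(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the threshold (assumed review trigger)."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)
```

For example, if group A is approved 80 times out of 100 and group B 56 times out of 100, B's ratio is about 0.7 and `flag_groups` returns `["B"]`. A flag is a prompt for investigation and documentation, not proof of bias; a fuller test would also compare outcomes such as default rates by cohort.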

Two playbooks (one clear winner)

Playbook A: Frictionless FinTech at scale (the pro-AI path)

- Target high-velocity journeys: onboarding, micro-credit, payment authorization.

- Optimize for latency and fraud recall, with a minimum-precision guardrail so false declines stay low.

- Ship in-app reason codes (no jargon).

- KPIs: approval lift, false-decline drop, NPS/CSAT uplift, fraud-loss ratio down.
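The recall-with-a-precision-guardrail idea in this playbook can be made concrete with a small threshold search. This is a sketch under assumptions: `pick_threshold` is a hypothetical helper, the 0.9 precision floor is illustrative, and the brute-force loop is only suitable for small validation samples.

```python
def pick_threshold(scores, labels, min_precision=0.9):
    """Return (threshold, precision, recall) with the highest fraud recall
    whose precision is at least min_precision, or None if none qualifies.

    scores: model fraud scores; labels: 1 for confirmed fraud, 0 otherwise.
    Transactions with score >= threshold are flagged.
    """
    total_fraud = sum(labels)
    best = None
    for threshold in sorted(set(scores)):
        flagged = [y for s, y in zip(scores, labels) if s >= threshold]
        true_positives = sum(flagged)
        precision = true_positives / len(flagged)
        recall = true_positives / total_fraud if total_fraud else 0.0
        # Strict '>' keeps the lowest threshold among equal-recall candidates.
        if precision >= min_precision and (best is None or recall > best[2]):
            best = (threshold, precision, recall)
    return best
```

On a toy sample with scores `[0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]` and labels `[0, 0, 0, 1, 0, 1, 1, 1]`, a 0.9 precision floor selects the 0.7 threshold (precision 1.0, recall 0.75). Relaxing the floor to 0.8 moves the threshold to 0.4 and catches every fraud case at the cost of one false decline. That trade-off is the business decision the guardrail makes explicit.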

Playbook B: Open, contestable scoring (the critics’ ask, delivered by AI)

- Publish feature classes and stability ranges (not proprietary weights).

- Offer score portability so customers aren’t trapped in one FinTech garden.

- Provide appeal SLAs and downloadable audit logs.

- Commission independent audits; run challenger models annually.

Who’s affected and what to ask your FinTech vendor

AI specialists: Which features drive lift? What’s our drift alerting?

HR heads: Are any credit-like scores repurposed in hiring flows (they shouldn’t be)?

Marketers & researchers: How do real-time decisions affect LTV, churn, and CAC?

Leaders & CEOs: Can we prove decisions are fast, fair, and defensible?

Everyday users: What are my reasons? How do I appeal? Is my score portable?

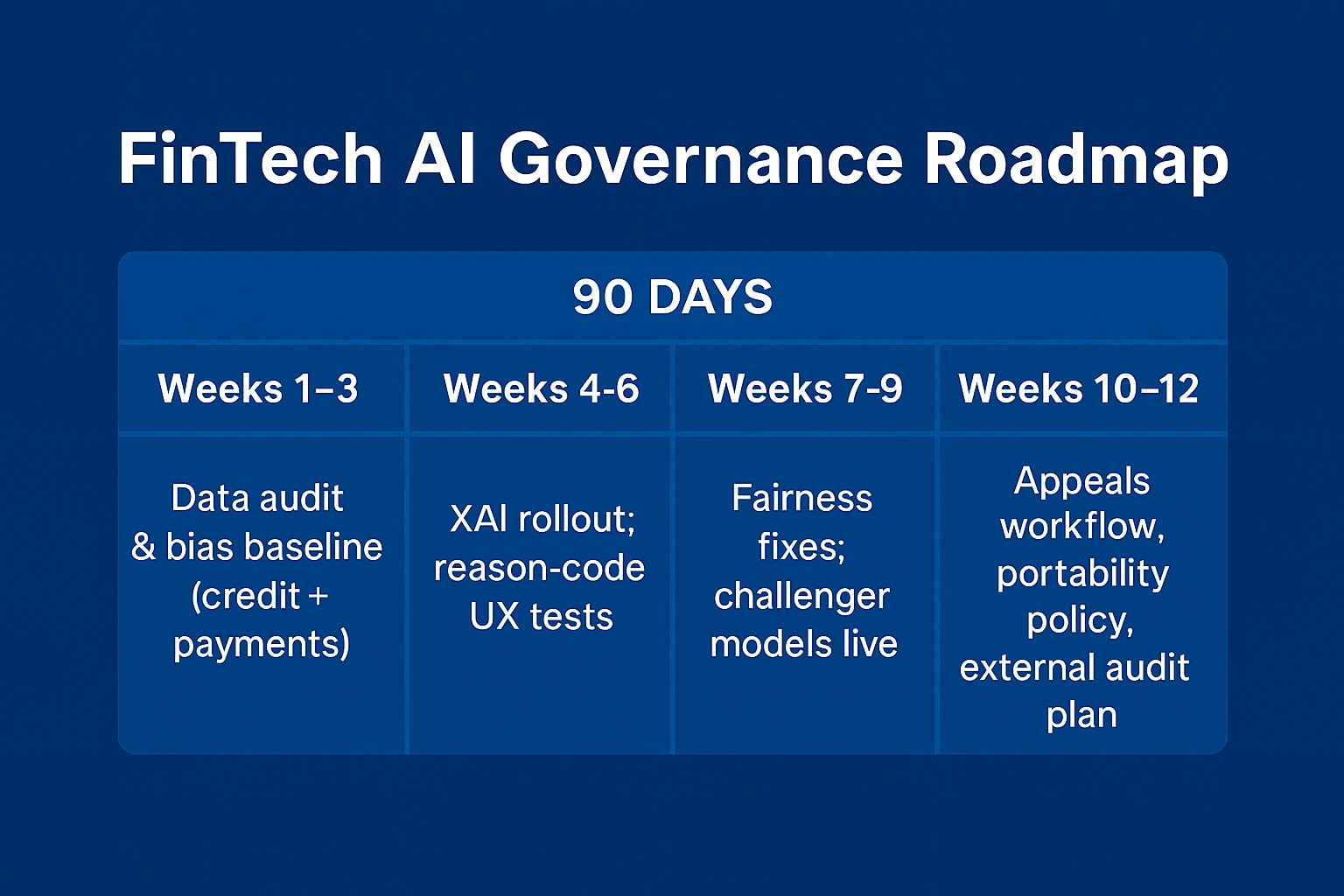

Conclusion: design the click

Frictionless finance doesn’t have to come with closed scoring systems. The pro-AI path wins when we engineer openness: real reason codes, fairness audits, portability, and credible appeals. If FinTech is the future of access, its models must earn trust in daylight, at real-time speed.

Can FinTech be both fast and fair?

Yes. FinTech AI can accelerate approvals and improve fairness when paired with reason codes, audits, and drift monitoring. Harvard Business Review details how design choices matter.

What exactly is a “closed scoring system,” and why care?

It’s a proprietary model with limited explainability, portability, and appeal rights. It risks vendor lock-in and erodes trust. The fix: open standards, independent testing, and portability by design (principles echoed in World Economic Forum guidance).

Do AI fraud tools cause more false declines?

Not when tuned. Graph and behavioral models typically reduce false positives by understanding relationships that static rules miss, which is critical for FinTech checkout flows (Forbes).

What metrics prove responsible FinTech AI?

Decision latency; appeal turnaround; reason-code clarity; fairness deltas; drift frequency; post-decision outcomes (e.g., default rates by cohort).
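Of these metrics, drift is the easiest to show in code. One common way to quantify it is the Population Stability Index (PSI), which compares the binned distribution of a score or feature at training time with a recent window. The sketch below is a plain-Python version; the equal-width binning, the `eps` floor for empty bins, and the function name are assumptions made for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent one.

    Bins are equal-width over the baseline's range; recent values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Identical samples give a PSI of 0, and the value grows as the recent distribution moves away from the baseline. A frequently quoted rule of thumb treats values under 0.1 as stable and values over 0.25 as a significant shift, but those cutoffs are conventions rather than guarantees, so alert thresholds should be set per model.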

Should we slow down because of systemic-risk concerns?

No; harden the system. Monitor social chatter as risk telemetry, diversify models and providers, and stress-test liquidity. Reuters summarizes why vigilance, not paralysis, matters.

References

- H-in-Q. “AI-Driven Credit Scoring in FinTech: Access for the Underbanked.” Practical inclusion playbook.

- H-in-Q. “Apple Card: Fairness & Transparency in FinTech.” What went wrong, what to fix.

- H-in-Q. “Hyper-Personalization with Analytics.” How data and modeling shape outcomes and trust.

- Harvard Business Review. “AI Can Make Bank Loans More Fair.” Fairness in lending.

- World Economic Forum. “AI in Financial Services” and “Responsible AI Playbook.” Governance patterns.

- Forbes. “AI’s Growing Role in Financial Security and Fraud Prevention.” Payments and AML.

- Reuters (EY survey). Most firms faced initial AI losses without controls; mature Responsible AI links to better outcomes.

- Reuters (UK study). AI-generated misinformation raises bank-run risk; treat social signals as risk telemetry.