Compliance Reimagined: AI’s Disruption in Fin Tech Compliance

The rise of artificial intelligence within Fin Tech is not a cautious, incremental upgrade; it is a full-scale transformation of how compliance operates in financial services. AI promises real-time surveillance, fraud detection, automated reporting, and sharply lower costs, offering financial institutions the ability to meet regulatory obligations with unprecedented efficiency and speed. Yet this technological leap risks eroding core ethical and accountability frameworks. As Fin Tech firms rush to embrace AI’s advantages, they risk surrendering transparency, human oversight, and moral responsibility. The task ahead: leverage AI’s potential while defending the integrity of financial systems.

How Fin Tech Uses AI to Redefine Compliance Efficiency

Real-Time Monitoring and Precision Analytics

AI-driven systems enable Fin Tech companies to monitor transactions, user behavior, and risk signals in real time. Applications such as automated anti-money laundering (AML) and fraud detection scan millions of data points per second, flagging suspicious patterns long before human analysts could. According to a comprehensive review, AI in banking and finance is revolutionizing fraud detection, credit risk analysis, and regulatory compliance, driving faster, more accurate decisions across complex data flows. (MDPI)

Traditional compliance models often rely on periodic audits and manual data sampling. AI flips that paradigm by delivering continuous oversight, effectively making delayed, reactive compliance cycles obsolete.
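To make the contrast with periodic sampling concrete, here is a minimal sketch of continuous monitoring: every transaction is checked against the account's recent history the moment it arrives. The `StreamingMonitor` class, its z-score rule, and its thresholds are illustrative assumptions, not a description of any real AML product.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev


class StreamingMonitor:
    """Checks each transaction on arrival against the account's recent history.

    Hypothetical example: a simple z-score rule stands in for a real model.
    """

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.z_threshold = z_threshold
        self.min_history = min_history
        # One bounded history of recent amounts per account.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, account_id, amount):
        """Return True if the amount deviates sharply from recent activity."""
        past = self.history[account_id]
        flagged = False
        if len(past) >= self.min_history:
            mu = mean(past)
            sigma = pstdev(past)
            if sigma > 0 and abs(amount - mu) / sigma > self.z_threshold:
                flagged = True
        # Record the transaction after scoring it.
        past.append(amount)
        return flagged


monitor = StreamingMonitor()
for amount in [100, 105, 95, 102, 98]:
    monitor.observe("acct-1", amount)       # builds up history, no flags yet
suspicious = monitor.observe("acct-1", 5000)  # scored immediately on arrival
```

A periodic audit would only see the 5000 transaction if it happened to be sampled days or weeks later; here it is scored as part of the same call that records it.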

Cost Reduction and Operational Speed

The pressure to reduce compliance costs while scaling services is existential for many financial institutions. AI delivers, reducing the burden of manual oversight, minimizing human error, and automating repetitive compliance tasks. According to a recent industry report, AI and blockchain adoption in regulatory technology is cutting compliance costs by up to 40% while preventing regulatory breaches before they occur. (fintechmagazine.com)

Cost Comparison: Traditional vs AI Compliance Systems

| Compliance Approach | Typical Annual Cost* | Typical Time-to-Flag Risk | False Positive Rate | Human Labor Required |
| --- | --- | --- | --- | --- |
| Traditional Manual Compliance | High (baseline) | Days to weeks | Moderate to High | High (many analysts) |
| AI-driven Compliance (2025) | ~40% lower cost | Real-time / Seconds | Lower (with tuning) | Low (automated) |

*Cost includes staffing, audit overhead, and manual review cycles.

That cost and time advantage is a strategic wedge, enabling Fin Tech firms to scale globally and handle compliance across jurisdictions while accommodating high transaction volumes, a capability that is nearly impossible under purely manual compliance regimes.

The Ethical Risks of AI-Driven Compliance in Fin Tech

Bias, Black Boxes, and Algorithmic Opacity

AI compliance systems run on complex machine-learning models that are often opaque, inscrutable even to their developers. This “black box” problem becomes especially dangerous when compliance decisions (who gets flagged, blocked, or reported to regulators) hinge on AI judgments. Research in the financial sector warns that opacity undermines fairness, accountability, and trust when AI systems cannot explain why they made certain compliance decisions. (MDPI)

In practice, a user might be flagged for AML or fraud, but no human can meaningfully explain why, and no appeal mechanism may exist. That undermines due process and creates ethical blind spots.
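As a contrast to an unexplained flag, the sketch below shows one hypothetical way a screening step could attach human-readable reasons to every decision, so that a reviewer or an appeal process has something concrete to examine. The `Decision` and `screen` names, the amount limit, and the placeholder country code `"XX"` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    flagged: bool
    reasons: list = field(default_factory=list)  # why the flag was raised
    appealable: bool = True                      # a human can review it


def screen(txn, amount_limit=10_000, high_risk_countries=frozenset({"XX"})):
    """Flag a transaction and record every rule that contributed.

    Hypothetical rules: the limit and country list are placeholders.
    """
    reasons = []
    if txn["amount"] >= amount_limit:
        reasons.append(f"amount {txn['amount']} >= limit {amount_limit}")
    if txn["country"] in high_risk_countries:
        reasons.append(f"country {txn['country']} on high-risk list")
    return Decision(flagged=bool(reasons), reasons=reasons)


decision = screen({"amount": 12_000, "country": "XX"})
for reason in decision.reasons:
    print(reason)
```

Real models are rarely this simple, but the design point carries over: if a decision cannot be accompanied by reasons in some form, neither the customer nor the compliance team can contest it.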

Data Surveillance and Customer Privacy

AI compliance often involves deep surveillance of user behavior, transaction history, communication patterns, and metadata. The breadth and granularity of data processed can erode user privacy, effectively transforming Fin Tech platforms into monitoring machines. The more data is gathered to satisfy compliance, the higher the risk of misuse, leaks, or excessive profiling, especially in the absence of stringent governance. (ey.com)

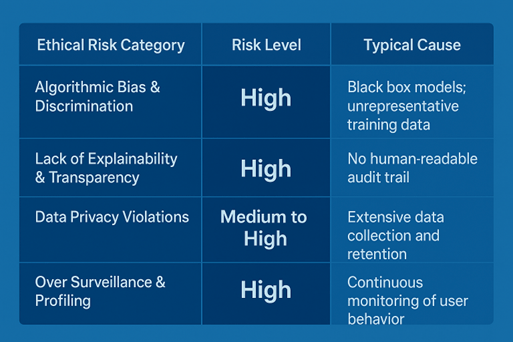

Ethical Risk Indicators in AI Compliance Platforms

These risks are often invisible to end users, who may never know why or how they were flagged, yet they bear the consequences: blocked transactions, frozen accounts, reputational damage.

Fintech and AI: Innovation Without Accountability?

Human Oversight is Being Phased Out

As AI compliance systems mature, many organizations reduce human oversight, trusting in automation’s consistency, speed, and cost-effectiveness. That reduces accountability. A human auditor might weigh context, provide nuance, and allow appeals; AI often does not. That shift turns compliance from a moderated safeguard into an automated black box.

Who Is Responsible for Wrong Decisions?

When AI wrongly flags legitimate activity or fails to catch illicit behavior, attribution becomes murky. Is the algorithm responsible, or the vendor, or the internal compliance team? The ambiguity undermines accountability and exposes firms to regulatory and reputational risk.

Human-led vs AI-led Compliance Audits: Accuracy & Accountability Comparison

| Audit Approach | Decision Transparency | Appeal Process | Speed | Accountability & Liability |
| --- | --- | --- | --- | --- |
| Human-led Audit | High: full documentation | Established, human review | Slow | Clear: persons accountable |
| AI-led Audit | Low: algorithmic output only | Often nonexistent or opaque | Fast | Diffuse: unclear who bears responsibility |

The shift from transparent human processes to opaque automation introduces systemic risk: organizations lose the ability to meaningfully contest or understand compliance outcomes.

How Financial Technology Partners Influence AI Governance

The Role of RegTech Vendors

Many Fin Tech firms rely on external vendors, or “financial technology partners,” to supply AI compliance systems rather than building them in-house. These RegTech vendors often provide turnkey AML, KYC (Know Your Customer), fraud detection, and reporting solutions, enabling rapid deployment without internal technical burden. That lowers barriers to AI adoption and accelerates compliance modernization. (ResearchGate)

Conflicts of Interest and Ethical Gaps

Yet relying on external vendors introduces tension: vendors prioritize product scalability and profitability, not necessarily transparency or accountability. Their business incentives may conflict with rigorous ethical standards. Without independent oversight, vendor-supplied AI might remain opaque, unaudited, and unchallengeable.

Top 5 Governance Risks When Outsourcing AI Compliance to Technology Partners

- Loss of transparency: algorithms remain proprietary and unreviewed.

- Reduced accountability: vendor shifts blame in case of failure.

- Incentive misalignment: vendor profit may outweigh compliance rigor.

- Vendor lock-in: firms become dependent on opaque systems.

- Regulatory risk: misconduct or failure may trigger legal exposure without clear liability.

Outsourcing compliance to AI vendors may accelerate deployment, but it also dilutes institutional control and oversight, a trade‑off that may undermine long-term trust in financial institutions.

Global Regulatory Lag in the Face of Fin Tech and AI Innovation

Disjointed Frameworks and National Standards

Regulators globally are struggling to keep pace with the rapid adoption of AI in financial services. Different jurisdictions implement divergent rules; some focus on data protection, others on algorithmic transparency or AML compliance. A recent scholarly review found that although AI in finance is growing rapidly, regulation remains patchy and inconsistent across countries. (arxiv.org)

Some regions are beginning to act: regulatory agencies are issuing guidelines demanding transparency and board‑level responsibility when firms use AI. But enforcement remains uneven and rarely swift enough to match technological deployment. (regulationtomorrow.com)

Why Regulation Struggles to Keep Up

The root problem: innovation outpaces legislation. AI systems evolve fast; regulators, by design, move slowly. Moreover, lawmakers may lack the technical expertise to draft effective rules, and existing legal frameworks were built for human decision‑makers. This regulatory gap leaves room for misuse, unintended consequences, and systemic risk.

Global Regulatory Readiness for AI in Financial Compliance

| Region / Jurisdiction | AI Regulatory Frameworks | Transparency Requirements | Enforcement Activity | Readiness Level (Low/Medium/High) |
| --- | --- | --- | --- | --- |
| European Union | Emerging regulations, AI laws in development | Moderate: some transparency mandates | Moderate | Medium |
| United States | Sector-by-sector oversight; guidance from regulators like NYDFS | Low: limited transparency mandates | Low to Medium | Low–Medium |
| Asia / Emerging Markets | Mixed; patchy adoption of AI rules | Varies by country | Low | Low |
| Global / Multinational Firms | Internal governance efforts; reliance on vendors | Inconsistent | Low | Low–Medium |

The uneven regulatory landscape means that firms operating across borders may exploit gaps, raising systemic risk and undermining the promise of responsible AI adoption.

Strategic Advantages vs Long-Term Risk in AI-Powered Compliance

Short-Term Wins: Efficiency, Accuracy, and Profit

For many Fin Tech firms, the appeal of AI compliance is undeniable. Faster onboarding, reduced fraud, fewer false positives, and lower operating costs lead directly to stronger bottom lines. Investors, seeing compliance as a bottleneck to scaling, increasingly value firms that embed AI, offering higher valuations and faster growth trajectories. AI-driven compliance becomes a strategic enabler rather than just a cost center. (SmartDev)

Long-Term Liabilities: Black Swans and Systemic Risk

But the long term may hold severe downsides. Overreliance on opaque AI systems may hide systemic risks: widespread false positives, unexplained denials of service, reputational damage, or large-scale failures. When compliance becomes automated and inscrutable, institutions lose the ability to audit decisions, defend actions, or maintain public trust, especially in case of error or scandal.

Four Emerging Strategic Risks in AI-driven Fin Tech Compliance

- Regulatory backlash: governments may impose hard restrictions or fines after AI-induced failures.

- Market trust erosion: customers and partners may abandon platforms after unexplained account freezes or rejections.

- Systemic instability: errors in AI compliance could cascade across institutions, harming market integrity.

- Legal liability ambiguity: firms may find themselves vulnerable without clear responsibility frameworks.

The Liability Dilemma: When AI Compliance Systems Fail

Who Gets Sued When AI Gets It Wrong?

If an AI compliance system wrongly flags a legitimate customer or fails to detect illicit behavior, who is liable? The vendor that built the model, the Fin Tech firm that deployed it, the compliance officer who signed off, or nobody? Current legal frameworks struggle to assign responsibility clearly. As one recent regulatory review argues, autonomous decision-making shifts liability into a gray zone. (arxiv.org)

Without precise liability rules or transparency requirements, victims of algorithmic errors may have no recourse. That undermines trust and exposes institutions to regulatory and reputational punishment with little chance of defense.

The Insurance Market’s Slow Adaptation

Insurance, often the safety net for compliance failure or regulatory fines, is scrambling to catch up. Traditional policies do not anticipate algorithmic risk, explainability failures, or systemic AI-induced errors, leaving firms exposed. The slow adaptation of insurance frameworks to AI risk is another indicator that current governance is ill-prepared for the rise of autonomous compliance.

Trends in AI Compliance Failure Litigation and Claims

As the number of AI-driven compliance systems increases, the likelihood of failures and their consequences escalates. Without robust liability frameworks and insurance adaptation, the financial system may inherit hidden risks that surface only after damage is done.

Ethics vs Innovation in AI-Powered Fin Tech

AI in Fin Tech compliance delivers undeniable advantages: automation, speed, cost savings, real-time monitoring, and the ability to scale across complex global operations. These gains transform compliance from a regulatory burden into a strategic asset. However, this transformation comes at a steep ethical and systemic price. Opaque algorithms, diminished human oversight, data surveillance, and murky liability expose financial institutions and their customers to significant risks.

Fin Tech must champion ethical AI design, transparent governance, and accountability frameworks. Innovation should not override integrity. The future belongs to financial firms that do not merely adopt AI for compliance but embed explainability, fairness, and trust as core pillars of that adoption.

AI is not the enemy; unethical, unregulated AI is. Fin Tech must choose between short-term convenience and long-term credibility.

References:

- The Role of Artificial Intelligence in Navigating Regulatory Compliance in Fintech

- How Artificial Intelligence is Reshaping the Financial Services Industry – EY

- AI Regulation in Financial Services: FCA Developments and Emerging Enforcement Risks – Regulation Tomorrow

- How AI is Revolutionising RegTech and Compliance – FinTech Magazine

- AI in Finance: Top Use Cases and Real-World Applications – SmartDev

- AI and Compliance in Banking and Financial Services – MDPI

- AI in Finance: Policy Challenges and Regulatory Responses – arXiv

- ai-fraud-detection-fintech-privacy-vs-cybercrime- H-in-Q